Generalizing from Training Data

Prerequisites:

You do not need to have attended the earlier talks. If you know zero math and zero machine learning, then this talk is for you: Jeff will do his best to explain fairly hard mathematics to you. If you know a bunch of math and/or a bunch of machine learning, then this talk is also for you: Jeff tries to spin the ideas in new ways.

Longer Abstract:

There is some theory behind this. If a machine is found that gives the correct answers on randomly chosen training data without simply memorizing it, then we can prove that, with high probability, this same machine will also work well on never-before-seen instances drawn from the same distribution. The easy proof requires D>m, where m is the number of bits needed to describe your learned machine and D is the number of training data items. A much harder proof (which we likely won't cover) requires only D>VC, where VC is the Vapnik–Chervonenkis (VC) dimension of your machine. The second requirement is easier to meet because VC≤m, and VC can be far smaller than m.
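A minimal sketch of both ideas, assuming the standard textbook setting rather than anything specific to the talk: the machines describable in m bits form a class of at most 2^m candidates, the learned machine fits every training label, and we tolerate true error ε with failure probability δ (ε, δ, and all function and variable names below are illustrative assumptions, not from the abstract). The union bound gives 2^m (1-ε)^D ≤ 2^m e^(-εD) ≤ δ, so D ≥ (m ln 2 + ln(1/δ))/ε suffices, which grows linearly in m just as "D>m" suggests. The sketch does not reproduce the harder VC proof. It only computes VC dimension by brute force on two hypothetical toy classes to show that VC never exceeds m and can be much smaller.

```python
import math
from itertools import combinations, product


def occam_sample_size(m_bits, epsilon, delta):
    """Training items D that suffice when the learned machine is one of at most
    2**m_bits candidates and matches all D labels.

    Union bound: P(some machine with true error > epsilon fits all D items)
    <= 2**m * (1 - epsilon)**D <= 2**m * exp(-epsilon * D). Forcing this
    below delta gives D >= (m ln 2 + ln(1/delta)) / epsilon.
    """
    return math.ceil((m_bits * math.log(2) + math.log(1 / delta)) / epsilon)


def vc_dimension(hypotheses, domain):
    """Brute-force VC dimension of a finite class over a finite domain:
    the size of the largest subset on which the class realizes all 2**k labelings."""
    best = 0
    for k in range(1, len(domain) + 1):
        if not any(
            len({tuple(h(x) for x in subset) for h in hypotheses}) == 2 ** k
            for subset in combinations(domain, k)
        ):
            break  # no k-set is shattered, so no larger set can be either
        best = k
    return best


# Hypothetical toy class 1: thresholds h_t(x) = [x >= t] on the points 0..7.
domain = list(range(8))
thresholds = [lambda x, t=t: int(x >= t) for t in range(len(domain) + 1)]
m_thresholds = math.ceil(math.log2(len(thresholds)))  # 9 machines need 4 bits

# Hypothetical toy class 2: every possible labeling of 3 points.
small_domain = list(range(3))
all_labelings = [lambda x, bits=bits: bits[x] for bits in product([0, 1], repeat=3)]
m_all = math.ceil(math.log2(len(all_labelings)))  # 8 machines need 3 bits

print(occam_sample_size(m_bits=100, epsilon=0.1, delta=0.01))  # → 740
print(vc_dimension(thresholds, domain), m_thresholds)  # → 1 4
print(vc_dimension(all_labelings, small_domain), m_all)  # → 3 3
```

The threshold class needs 4 bits to describe but has VC dimension 1, so the VC requirement is far easier to meet there. The class of all labelings of 3 points hits VC = m exactly, which is why the general relationship is VC≤m rather than a strict inequality.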

Speakers

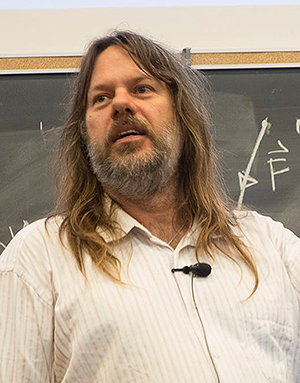

Prof. Jeff Edmonds

Biography:

Jeff Edmonds has been a computer science professor at York University since 1995, after getting his bachelor's degree at Waterloo and his Ph.D. at the University of Toronto. His background is in theoretical computer science. His passion is explaining fairly hard mathematics to novices. He has never done anything practical in machine learning, but he is eager to help you understand his musings about the topic.

https://www.eecs.yorku.ca/~jeff/