Reinforcement Learning Game Tree / Markoff Chains

Prerequisites:

You do not need to have attended the earlier talks. If you know zero math and zero machine learning, then this talk is for you. Jeff will do his best to explain fairly hard mathematics to you. If you know a bunch of math and/or a bunch of machine learning, then these talks are also for you. Jeff tries to spin the ideas in new ways.

Longer Abstract:

At the risk of being non-standard, Jeff will tell you the way he thinks about this topic. Both "Game Trees" and "Markoff Chains" represent the graph of states through which your agent traverses a path while completing the task. Suppose we could learn, for each such state, a value measuring "how good" that state is for the agent. Then completing the task optimally would be easy. If our current state is one in which our agent gets to choose the next action, she will choose the action that maximizes the value of the next state. If instead our adversary gets to choose, he will choose the action that minimizes this value. Finally, if our current state is one in which the universe flips a coin, each edge leaving this state is labeled with the probability of taking it, and the value of the state is the probability-weighted average of the values of the states it leads to. Knowing that this is how the game is played, we can compute how good each state is. Each state in which the task is complete is worth whatever reward the agent receives in that state. These values then trickle backwards until we learn the value of the start state. The computational challenge is that there are far more states than we can ever look at.
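To make the backward computation concrete, here is a minimal Python sketch of what is often called expectiminimax, assuming the states form a small finite tree with no cycles. The class names `Leaf`, `MaxNode`, `MinNode`, `ChanceNode` and the functions `value` and `best_move` are hypothetical illustrations, not code from the talk. Agent states take the maximum over their children, adversary states take the minimum, coin-flip states take the probability-weighted average, and terminal states return their reward.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

# Hypothetical state types. Assumption: the state graph is a finite tree (no cycles).

@dataclass
class Leaf:
    """A state in which the task is complete; worth the reward received there."""
    reward: float

@dataclass
class MaxNode:
    """A state in which our agent chooses the next action."""
    children: List["Node"]

@dataclass
class MinNode:
    """A state in which the adversary chooses the next action."""
    children: List["Node"]

@dataclass
class ChanceNode:
    """A state in which the universe flips a coin: (probability, next state) pairs."""
    edges: List[Tuple[float, "Node"]]

Node = Union[Leaf, MaxNode, MinNode, ChanceNode]

def value(node: Node) -> float:
    """Compute how good a state is by letting values trickle back from the leaves."""
    if isinstance(node, Leaf):
        return node.reward
    if isinstance(node, MaxNode):
        # The agent picks the child with the largest value.
        return max(value(c) for c in node.children)
    if isinstance(node, MinNode):
        # The adversary picks the child with the smallest value.
        return min(value(c) for c in node.children)
    if isinstance(node, ChanceNode):
        # Probability-weighted average of the children's values.
        return sum(p * value(c) for p, c in node.edges)
    raise TypeError(f"unknown state type: {type(node).__name__}")

def best_move(node: MaxNode) -> int:
    """Index of the action that leads to the highest-valued next state."""
    return max(range(len(node.children)), key=lambda i: value(node.children[i]))

# Usage: the agent chooses between a fair coin flip (10 or 0)
# and a state where the adversary chooses between 8 and 3.
start = MaxNode([
    ChanceNode([(0.5, Leaf(10.0)), (0.5, Leaf(0.0))]),
    MinNode([Leaf(8.0), Leaf(3.0)]),
])
print(value(start))      # 5.0
print(best_move(start))  # 0
```

Because `value` recurses into every state below the one it is given, its running time grows with the total number of states, which is exactly the computational challenge the abstract ends on.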

Speakers

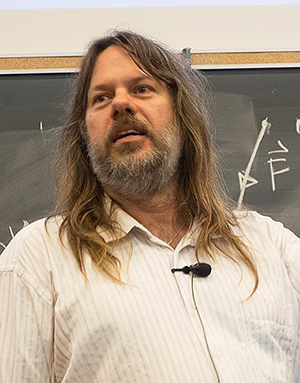

Prof. Jeff Edmonds

Biography:

Jeff Edmonds has been a computer science professor at York since 1995, after getting his bachelor's at Waterloo and his Ph.D. at the University of Toronto. His background is theoretical computer science. His passion is explaining fairly hard mathematics to novices. He has never done anything practical in machine learning, but he is eager to help you understand his musings about the topic.

https://www.eecs.yorku.ca/~jeff/