2023 IEEE Western New York Image and Signal Processing Workshop: AI Capabilities and Limitations, Ethical Risks, Uses, and Accountability in Healthcare

2023 IEEE Western New York Image and Signal Processing Workshop

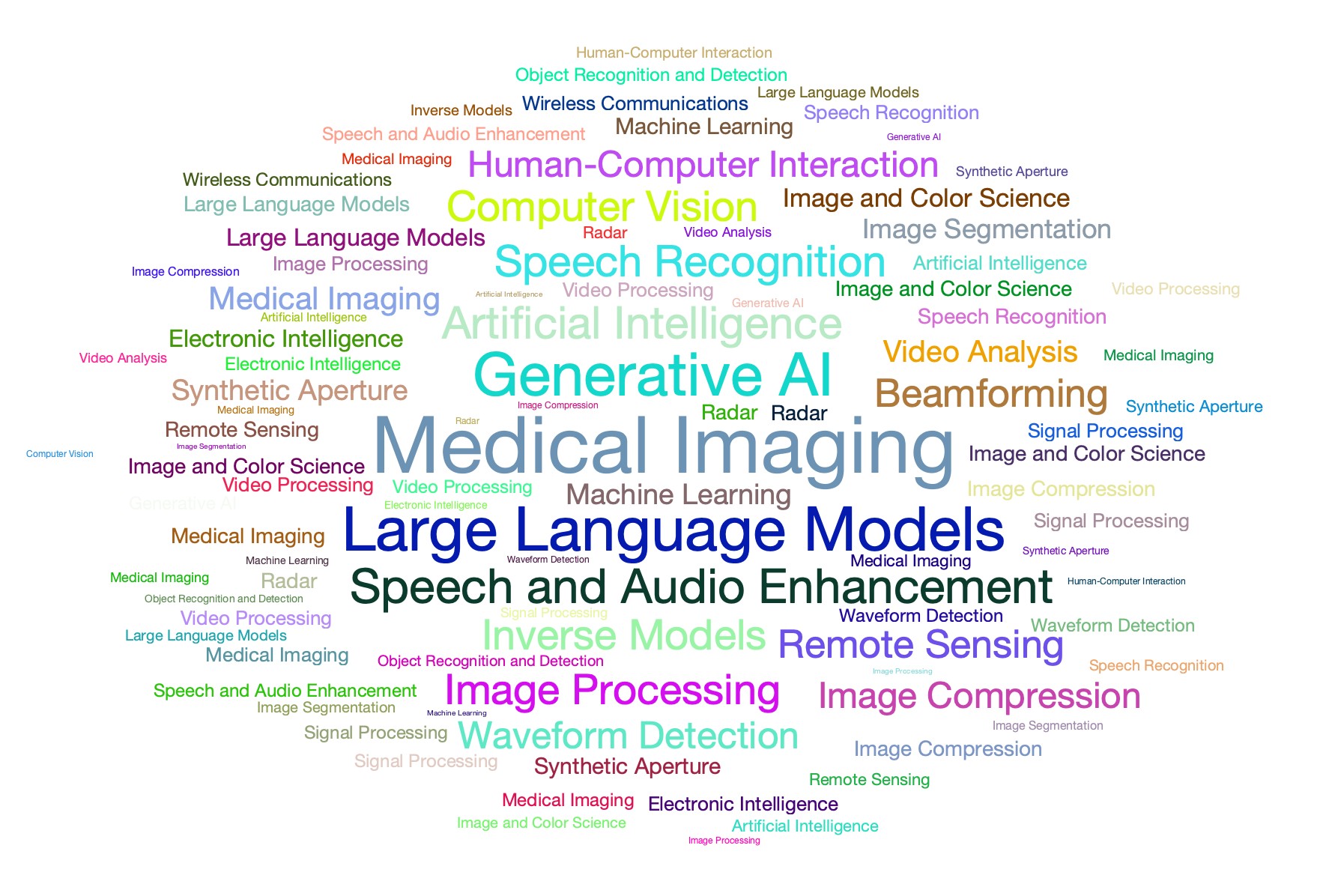

The 2023 Western New York Image and Signal Processing Workshop (WNYISPW) is a premier venue for promoting research on applications of AI, image processing, and signal processing in the Western New York region, and for facilitating interaction among academic researchers, industry professionals, and students.

The workshop is organized as a morning session, a poster session with a catered lunch, and an afternoon session.

Morning session:

Oral presentations covering a diverse set of reviewed and accepted research submissions.

Invited presentations on neuroscience advances in analysis, image and signal processing, statistical, and experimental design techniques that uncover neural functions of the human brain, and on techniques for identifying anomalous neural behavioral patterns (neuromarkers) from inherently noisy multi-dimensional signals.

Lunch.

Poster session exhibiting a diverse set of reviewed and accepted research submissions.

Afternoon session:

Oral presentations covering a diverse set of reviewed and accepted research submissions.

Invited presentation on photonics, imaging, and real-time computational processes.

Invited presentations on the capabilities, limitations, ethical risks, accountability, and guardrails of Large Language Models and Generative AI.

Panel discussion and debate on how Generative AI applications may be optimized to augment, rather than detract from, the human experience.

The workshop will be held in a hybrid in-person / virtual format on Friday, November 3rd, 2023 from 8:00 AM (check-in) to 5:30 PM at the RIT Student Development Center.

For details, see the workshop webpage at: https://ewh.ieee.org/r1/rochester/sp/WNYISPW2023.html

Awards will be given for best student paper and best student abstract.

Important Dates:

| Date | Milestone |
|---|---|
| July 5 | Paper and poster submission opens |
| October 16 | Paper submission closes (extended) |
| October 20 | Notification of acceptance |
| October 25 | Submission of camera-ready paper and virtual poster files |
| November 3 | Workshop |

The Western New York Image and Signal Processing Workshop is sponsored by the Rochester Section of the IEEE, the Rochester Chapter of the IEEE Signal Processing Society, IEEE Computer Society and IEEE Photonics Society, in technical cooperation with the Rochester Chapter of the Society for Imaging Science and Technology.

The Western New York Image and Signal Processing Workshop is supported by Vanteon Wireless Solutions.

Date and Time

- Date: 03 Nov 2023

- Time: 03:15 PM to 04:30 PM

- All times are (UTC-04:00) Eastern Time (US & Canada)


Location

- Rochester Institute of Technology

- Briggs Place

- Rochester, New York

- United States 14623

- Building: Student Development Center

- Room Number: Conference Room


Hosts

- Co-sponsored by Rochester Section, Region 1

Agenda

Dr. Christopher Kanan: The Current Capabilities and Limitations of Generative AI

Abstract: Generative AI systems are now being used across all sectors of the economy. They have enabled fundamental advances in editing and drafting documents, programming, creating art, chatbots, automation, and much more. They have democratized skills that previously required years of training, and they can greatly enhance the productivity of individuals and organizations. These systems have been dubbed "foundation models" because they are extremely large AI systems that learn through self-supervised learning objectives and can be used for many tasks. In this talk, I describe how some of the most influential systems work, describe their current capabilities and limitations, and discuss some of the missing ingredients needed to advance them further.

Dr. Jonathan Herington: High-Level Ethical Risks for Generative AI

Abstract: The rapid advancement of generative AI offers exciting possibilities but also raises critical ethical challenges around what it means to be a human being. There has been much focus on familiar ethical risks: (1) many models train on massive datasets scraped without consent, potentially undermining individual privacy and profiting from public data; (2) training data reflects societal prejudices, which can propagate unfair outputs that marginalize vulnerable groups; and (3) as systems become more autonomous, unpredictable behaviors beyond developer intentions could emerge. These risks are real and immediate, but generative models also raise more existential ethical questions: What does it mean to "create" something in the context of generative AI? Should we worry about the atrophy of creative and analytic skills in the face of tools that can do those tasks for most of us? What are humans distinctively good at in a world with advanced generative models? In this talk, I explore a simple philosophical framework for thinking about these risks that focuses on (i) promoting human welfare, (ii) respecting human autonomy, and (iii) justly distributing the benefits and burdens of these systems.

Dr. Andrew D. White: Language is the future of Chemistry

Abstract: Large language models (LLMs) are beginning a new era of chemistry, in which LLMs can connect and reason about experiments, tools, and databases to accomplish open-ended tasks. This creates new opportunities to accelerate chemistry, but it also makes model outputs harder to interpret. I will summarize our recent work on applying LLMs to authentic chemical challenges, their ability to use tools and access the scientific literature, and how we can force them to explain themselves.

Dr. Kathleen Fear: Accountability and Generative AI in Healthcare

Abstract: This presentation will explore the transformative potential of large language models (LLMs) and generative AI tools for addressing problems in the healthcare sector. The focus will be on leveraging these technologies to streamline time-consuming procedures, reduce administrative burden, and enhance patient care. The talk will examine how to identify appropriate problem spaces for generative AI, with an emphasis on minimizing risks and ensuring the utmost safety and privacy for patients. It will also touch on the ethical, regulatory, and accountability issues that come with implementing AI in healthcare.