IEEE SCV WIE AI Summit 2025

IEEE SCV WIE AI Summit 2025 - Generative AI, LLMs, Vision Language Models (VLMs), Retrieval-Augmented Generation (RAG) and more

In an era where AI technologies are rapidly transforming industries and redefining possibilities, it is crucial to explore both the innovations driving this change and the responsibilities that come with it. Today, we will delve into a diverse array of topics that highlight the multifaceted nature of AI and its profound impact on our lives.

Our sessions will cover the latest developments in Large Language Models and Foundation Models, exploring efficient fine-tuning, multilingual adaptation, and the role of LLMs as knowledge bases. We will also examine the evolution of AI agents, focusing on autonomous task completion, multi-agent collaboration, and the integration of external knowledge for robust decision-making.

In the realm of Vision and Multimodality, we will explore the integration of text, image, and video understanding, as well as advanced techniques like zero-shot learning and self-supervised learning. Our discussions on MLOps for LLMs will provide insights into best practices for training, deploying, and evaluating large models.

We will also address the critical areas of Knowledge-Grounded Reasoning, On-Device Learning, and the ethical dimensions of AI, including bias mitigation, privacy preservation, and the detection of misinformation.

Talk tracks are broadly classified into, but not limited to, the following:

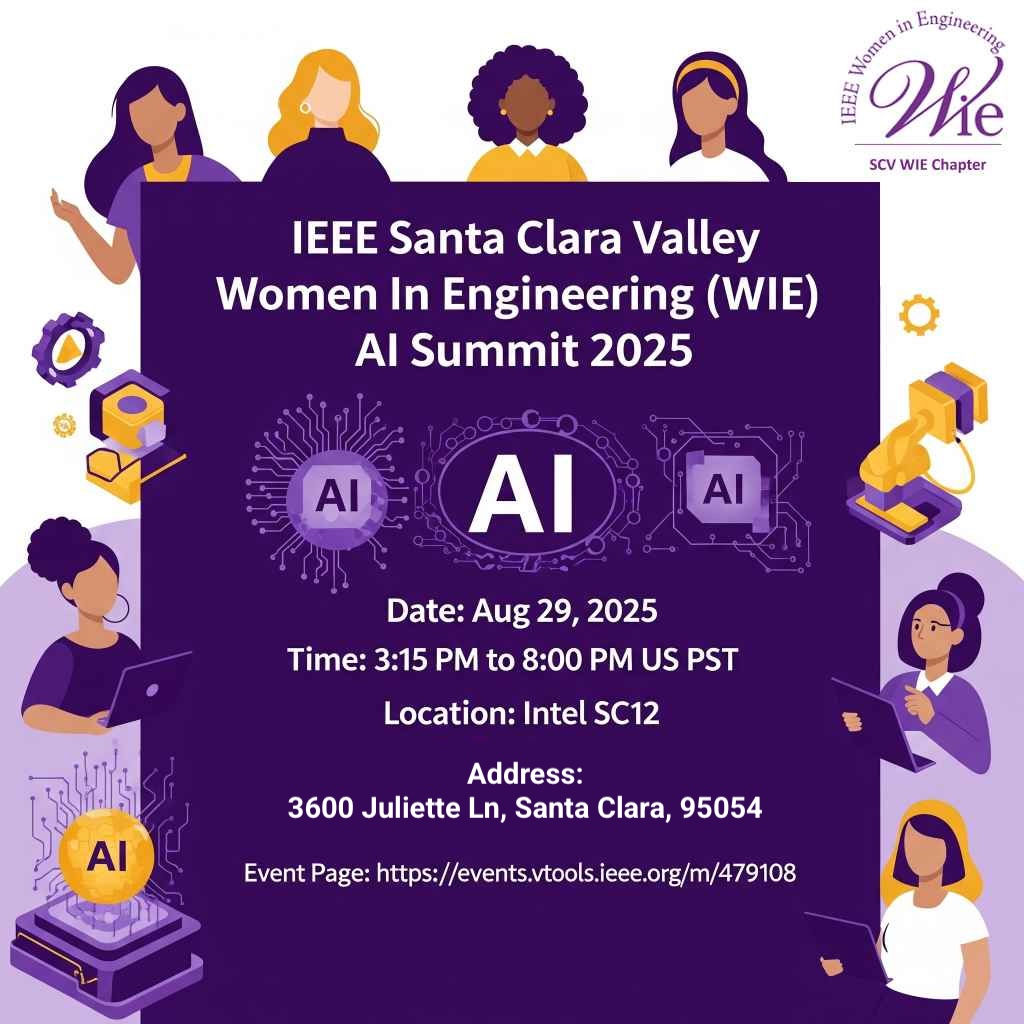

Date and Time

Location

Hosts

Registration

- Intel

- 3600 Juliette Ln, Santa Clara, CA 95054, US

- Santa Clara, California

- United States 95054

- Building: SC12

- Contact Event Hosts

Shweta Behere @ sbehere@ieee.org

Sue Delafuente @ sdelafuente@ieee.org

Ipsita Mohanty @ imohanty@ieee.org

Sreyashi Das @ das.sreyashi@gmail.com

Hazel Stoiber @ hazeldieh@ieee.org

- Starts 07 April 2025 07:00 AM UTC

- Ends 29 August 2025 07:00 AM UTC

- Admission fee ?

Speakers

Jagruti Mahante & Shreya Anand of Workday

The LLM Imperative: Secure Your AI Frontier Before It Fractures Your Enterprise

The rapid infiltration of Large Language Models (LLMs) into Enterprise workflows ignites a critical imperative: define and defend the ethical boundaries of their usage. This isn't just an internal challenge; it extends across your entire third-party ecosystem, creating an easy case for bias, data breaches, and ethical erosion.

This talk unveils a practical framework for enforcing ethical boundaries around LLM access in the Enterprise using Zero Trust methodologies. We will explore how structured access controls, contextual filtering, and usage logging can reduce risk without stifling innovation. But remember, when it comes to AI, it’s not the bot that’s scary - it’s what the bot knows!

We will illuminate the path to cross-functional synergy: uniting security, legal, compliance, and corporate IT to forge a unified definition and operationalization of "ethical access", especially in environments where sensitive data flows between devices, SaaS platforms, and AI systems. Attendees will leave with a blueprint for responsibly enabling LLM-powered tools while maintaining enterprise trust and governance across the full digital supply chain, because when it comes to AI, don't just trust the bot, trust the boundaries you set.
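The three controls named in the abstract (structured access controls, contextual filtering, and usage logging) can be pictured with a minimal sketch. This is not the speakers' framework: the `LLMGateway` class, the `ALLOWED` policy table, the role and classification names, and the email-only redaction pattern are all hypothetical, chosen only to show a deny-by-default check in front of an LLM.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy: which roles may send which data classifications to an LLM.
ALLOWED = {
    "engineer": {"public", "internal"},
    "vendor": {"public"},
}

# Illustrative pattern only; a real filter would cover far more than emails.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class LLMGateway:
    audit_log: list = field(default_factory=list)

    def submit(self, user: str, role: str, classification: str, prompt: str):
        # Structured access control: deny by default unless the role is
        # explicitly allowed to use data of this classification.
        allowed = classification in ALLOWED.get(role, set())
        # Contextual filtering: redact email addresses before the prompt leaves.
        filtered = EMAIL.sub("[REDACTED_EMAIL]", prompt) if allowed else None
        # Usage logging: record every attempt, including denied ones.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "classification": classification,
            "allowed": allowed,
        })
        # The caller forwards the filtered prompt to the model, or stops on None.
        return filtered
```

Denied requests return `None` but still leave an audit record, so the log covers every attempt, not only the prompts that reached the model.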

Biography:

Jagruti Mahante is a Senior Manager, Third-Party Security at Workday within Enterprise Security, with expertise in Security Risk Assessment and Management, especially Third-Party Risk Management in an enterprise environment. As a leader in cybersecurity, third-party risk, and compliance, she is passionate about bringing people together to make the right decisions for managing risk. To meet business objectives, organizations have to take risks; however, with the right people, processes, and technology, they can respond to risk in a way that is secure and best fits the situation.

Shreya Anand is a seasoned Cyber Security Professional with over 17 years of dedicated experience in all aspects of Security, Engineering, and Operations. She has earned a reputation as a results-oriented leader with a steadfast commitment to protecting the digital world. Her track record includes various roles in threat modeling, risk evaluation and mitigation, enterprise tool operations, and the implementation of cutting-edge security solutions across the Enterprise. Shreya is deeply passionate about mentoring the next generation of cybersecurity professionals, particularly women and youth, and actively works to bridge the gender gap in Cybersecurity. Her leadership style is characterized by a strategic mindset, and she thrives in challenging environments, consistently delivering results that exceed expectations.

Jesmin Jahan Tithi of Intel

Designing Data Centers for Next-Gen Language Models

The rapid expansion of Large Language Models (LLMs), such as GPT-4 with 1.8 trillion parameters, necessitates innovative data center designs to enhance efficiency and scalability. My research explores architectures tailored for next-generation LLMs, focusing on compute, memory, storage, and network components. We highlight the transformative impact of high-speed, low-latency networks, particularly Fullflat (equal bandwidth between any two nodes in the network) configurations, in addressing scalability challenges. Our sensitivity analyses reveal the benefits of overlapping compute and communication, hardware-accelerated collective communication, and strategic memory management in optimizing performance. By comparing sparse and dense models, we demonstrate the adaptability of data centers to diverse LLM architectures. We provide insights into optimization strategies that maximize Model FLOPS Utilization (MFU). Our findings offer a roadmap for developing data centers capable of efficiently deploying complex models, ensuring continued advancements in AI capabilities. This research serves as a guide for co-designing data center solutions that meet the evolving demands of LLMs.
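For readers unfamiliar with MFU, the metric is the ratio of the FLOPs a training run actually achieves per second to the hardware's theoretical peak. The sketch below is not taken from the talk: it assumes the common approximation of about 6 FLOPs per parameter per token for dense transformer training (forward plus backward pass, attention cost ignored), and the function name and example numbers are hypothetical.

```python
def model_flops_utilization(params: float, tokens_per_sec: float,
                            num_accelerators: int,
                            peak_flops_per_accel: float) -> float:
    """Estimate MFU for dense transformer training.

    Assumption: roughly 6 FLOPs per parameter per token (forward + backward),
    which ignores the attention term and any recomputation.
    """
    flops_per_token = 6.0 * params
    achieved_flops_per_sec = flops_per_token * tokens_per_sec
    peak_flops_per_sec = num_accelerators * peak_flops_per_accel
    return achieved_flops_per_sec / peak_flops_per_sec


# Made-up numbers: a 1B-parameter model processing 100k tokens/s
# on 8 accelerators rated at 100 TFLOP/s each.
mfu = model_flops_utilization(1e9, 1e5, 8, 1e14)
print(f"MFU = {mfu:.2f}")
```

With these illustrative inputs the run achieves 6e14 FLOP/s against a peak of 8e14 FLOP/s. Overlapping compute with communication, one of the techniques the abstract mentions, raises the numerator without changing the denominator.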

Harika Rama Tulasi Karatapu of Google

AI Powered SaaS on GCP

This presentation explores the integration of Artificial Intelligence (AI) into Software-as-a-Service (SaaS) applications built on Google Cloud Platform (GCP). It highlights the evolution from traditional SaaS to AI-powered solutions characterized by hyper-personalization, intent-driven workflows, and outcome-based automation. Architecting multi-tenant AI SaaS on GCP necessitates careful planning around data security, tenant isolation, model selection (leveraging tools like Vertex AI), and cost optimization per tenant.

Key components discussed include Large Language Models (LLMs) and common design patterns like Retrieval-Augmented Generation (RAG) for incorporating external knowledge and fine-tuning for domain specialization. The presentation outlines GCP's building blocks, such as Vertex AI, Kubernetes Engine, AlloyDB, and TPUs, integrated within a SaaS control plane managing tenant onboarding, identity, metering, and tiered experiences (Basic, Premium, Enterprise).

Monetization strategies for AI-powered SaaS are addressed, covering challenges like accurate usage prediction and communicating AI value. Hybrid and tiered pricing models are suggested to balance subscription fees with usage-based charges for AI features. Finally, the Google Cloud SaaS Architecture is presented as a resource to help Independent Software Vendors (ISVs) design, build, and monetize their SaaS offerings on GCP.

Biography:

Harika Karatapu is a Network Solutions Architect with over 10 years of experience in cloud and traditional networking. With a strong technical foundation for Networks and Security, she specializes in designing and implementing secure, high-performance networking solutions across Google Cloud Platform (GCP) and Amazon Web Services (AWS).

Currently a Networking Specialist, Customer Engineer at Google LLC, Harika architects cloud-native and hybrid networking solutions for enterprise clients, optimizing cost, performance, and security. She collaborates with C-suite executives on cloud adoption strategies, leads the Network Architecture for Google Cloud along with AI powered SaaS, and is a Technical Accelerator mentor for Google Cloud Startups.

Prior to Google, she honed her expertise at Juniper Networks, Amazon Web Services, and Infosys, working on network architecture, troubleshooting, automation, and security. A JNCIE-ENT, JNCIE-DC certified Juniper expert.

Pallishree Panigrahi of Amazon

Designing for Trust: Responsible AI from Data to Deployment

As artificial intelligence becomes more woven into our daily routines, whether it’s recommending what we watch, helping doctors diagnose illnesses, or automating business decisions, the question of trust becomes more important than ever. How do we make sure these powerful systems are not just smart, but also fair, transparent, and respectful of our privacy?

This presentation takes a practical look at what it really means to build responsible AI. I’ll talk about the importance of ethical guidelines that go beyond just code and data, and why it’s crucial to involve not only engineers, but also ethicists, legal experts, and everyday people who are affected by these technologies.

One of the biggest challenges is how we handle data. AI systems are only as good as the information we feed them. That means we need to be thoughtful about where data comes from, how it’s collected, and how it’s protected. I’ll discuss real-world techniques like getting clear consent from users, only collecting what’s truly needed, and using tools like differential privacy to keep personal information safe. Regularly checking data for bias and errors is another key step to make sure AI decisions are fair and reliable.

But it’s not just about the data. I’ll also share ways to make AI systems more understandable and accountable—from building models that can explain their decisions, to setting up processes for humans to review and override automated choices when needed.

Finally, I’ll highlight why it’s so important to keep the conversation open with everyone involved—users, communities, and stakeholders. By listening to feedback and being transparent about what AI can and can’t do, we can build systems that genuinely earn people’s trust.

Through stories, examples, and lessons learned, this talk will offer practical advice for anyone who wants to help create AI that’s not just cutting-edge, but also ethical and trustworthy.
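The abstract mentions differential privacy as one tool for protecting personal information. As a rough illustration (not the speaker's implementation), the sketch below applies the Laplace mechanism to a counting query: a count changes by at most 1 when one person is added or removed, so adding Laplace noise with scale 1/epsilon gives epsilon-differential privacy for that query. The function names and sample data are hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponentials with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the items satisfying `predicate`."""
    true_count = sum(1 for v in values if predicate(v))
    # A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: how many users are over 40?
ages = [23, 35, 41, 52, 29, 67, 44]
rng = random.Random(0)
print(private_count(ages, lambda a: a > 40, epsilon=1.0, rng=rng))
```

A smaller epsilon means more noise and stronger privacy. The noisy answers average out to the true count over many queries, which is also why a real deployment has to track the total privacy budget spent.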

Biography:

Pallishree Panigrahi is the Head of Data at Amazon Key, where she leads global data strategy, engineering, and analytics to power Amazon’s smart access and delivery products. With over 15 years of experience at Amazon, Meta, and other industry-leading organizations, she has built data platforms that drive innovation, operational excellence, and customer trust at scale.

Her work enables predictive intelligence, real-time feedback systems, and data products that support faster decisions and more human-centered technology. Recognized for bridging technical depth with ethical clarity, Pallishree is a leading voice on responsible AI, data governance, and inclusive innovation.

She mentors women in tech, speaks on building accountable AI systems, and champions a future where data serves both business and society. Based in the Bay Area, Pallishree brings a rare combination of strategic vision and technical expertise to shaping systems people can trust.

Rong Wang and Sushma Venkatesh Reddy of Intel

The First Enablement of GPU On-Device LLMs on Intel Chromebook Platforms

Chromebooks, which run a browser-based OS, have naturally relied on cloud-based AI solutions for their main LLM use cases. To deliver a more cost-effective Chromebook Plus device with advanced AI features and leading power and performance efficiency, we partnered with Google and enabled on-device LLMs for the first time on the Gen12 GPU. In this presentation, we will talk about the challenges, solutions, optimizations, and evolution of GPU on-device LLMs on Intel Chromebook platforms.

This presentation will start with an introduction to the ChromeOS GPU on-device AI software stack and the challenges posed by its key components: Vulkan-only graphics support, the WebGPU Dawn framework, and Google’s inferencing framework. Following the software architecture, we will talk about our joint efforts with Google to leverage the capabilities of various Intellectual Properties (IPs) to achieve the best end-to-end power and performance for the new Chromebook Plus products. We will present details on how we profile our hardware and software, identify optimizations, improve various open-source software components including Mesa and Dawn, and analyze and optimize shaders to overcome the unique challenges of the Vulkan compute path.

The first GPU on-device LLM was successfully enabled on Chrome Mainstream segment devices. As AI models and ChromeOS continue to evolve, we will conclude the presentation with a forward-looking overview of the ChromeOS transition to an Android-based OS and how this will impact future on-device LLM enablement on Chrome products across all segments.

Biography:

Rong Wang is a Principal Engineer at Intel with a track record of product software architecture and integration across multiple operating systems and platforms. She is the software architect leading the next-generation ChromeOS migration on Intel platforms. She is also the domain technical lead for Chrome graphics, media, and display. Since she joined Intel in 2015, Rong has been leading Intel engineering teams and partnering with Google to deliver key differentiation technologies on Intel Chromebooks to maintain competitive Chrome software and product roadmaps. Before joining Intel, she worked in the Bay Area, California, as a software architect and full-stack developer for kernel, driver, middleware, and application design and development on Windows and Linux, including GPU and multi-core development using Nvidia CUDA, OpenCL, and OpenMP.

Sushma Venkatesh Reddy is a GPU Software Development Engineer at Intel Corporation, where she contributes to open-source development in the Mesa 3D Graphics Library. She holds a Master’s degree in Information Technology from the University of North Carolina at Charlotte. Since joining Intel, Sushma has worked on Chrome OS GPU integration, focusing on graphics and media, and has driven initiatives to enhance GPU power and performance - key enablers for AI workloads. Her work plays a key role in advancing the capabilities of on-device AI and graphics technologies.

Disha Ahuja of Cisco

Multi-Agentic Synthetic Data Generation for Realistic, Multi-Turn Conversations

We present a graph-based, multi-agentic framework for generating high-fidelity synthetic conversational data tailored to the development and evaluation of LLM-based assistant systems. Built on LangGraph, our workflow orchestrates two ReAct agents, each with a distinct persona, engaged in extended, multi-turn dialogues. By structuring conversations as dynamic agent interactions enriched with retrieval tools, context validation, and humanization steps (e.g., colloquialisms, typos), we produce diverse, scenario-driven transcripts that closely mimic real-world user–assistant interactions. This approach goes beyond static or single-turn synthetic methods by capturing the fluid, context-rich nature of natural conversations.

Our method enables scalable generation of realistic, high-variance datasets that are critical for training, fine-tuning, and stress-testing dialog systems in applied settings. We will detail the system design, persona construction, graph orchestration, and technical challenges encountered, offering practical insights into building robust synthetic data pipelines for next-generation AI assistants.
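The overall shape of such a pipeline (two personas taking turns, with a humanization pass on the user side) can be sketched in plain Python. This is only an illustration under strong assumptions: it does not use LangGraph, the agents are scripted stand-ins for LLM-backed personas, and every name here (`scripted_agent`, `humanize`, `generate_dialogue`) is hypothetical and not part of the presented system.

```python
import random
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (speaker, text)
Agent = Callable[[List[Turn]], str]


def scripted_agent(lines: List[str]) -> Agent:
    """Hypothetical stand-in for an LLM-backed persona: replays fixed lines."""
    remaining = iter(lines)

    def respond(history: List[Turn]) -> str:
        # A real agent would condition on `history`; this stub ignores it.
        return next(remaining, "Thanks, that is all.")

    return respond


def humanize(text: str, rng: random.Random, typo_rate: float = 0.1) -> str:
    """Swap two adjacent characters in some words to mimic typing errors."""
    words = text.split()
    for i, word in enumerate(words):
        if len(word) > 3 and rng.random() < typo_rate:
            j = rng.randrange(len(word) - 1)
            words[i] = word[:j] + word[j + 1] + word[j] + word[j + 2:]
    return " ".join(words)


def generate_dialogue(user: Agent, assistant: Agent, turns: int,
                      rng: random.Random) -> List[Turn]:
    """Alternate user and assistant turns, humanizing only the user side."""
    history: List[Turn] = []
    for _ in range(turns):
        history.append(("user", humanize(user(history), rng)))
        history.append(("assistant", assistant(history)))
    return history


dialogue = generate_dialogue(
    scripted_agent(["my vpn keeps dropping", "yes i tried rebooting"]),
    scripted_agent(["Have you tried rebooting?", "Let me check the logs."]),
    turns=2,
    rng=random.Random(1),
)
for speaker, text in dialogue:
    print(f"{speaker}: {text}")
```

Replacing each scripted agent with a model call that receives the persona and the running history would give the multi-turn behavior the abstract describes. Retrieval tools and context validation would be further steps between turns.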

Biography:

Disha is a seasoned AI/ML leader with over 18 years of experience delivering impactful solutions across enterprise, consumer technology, and autonomous driving. As the head of Cisco’s GenAI ML team, she drives innovation by developing multi-agent systems that power AI Assistants and products across Cisco’s portfolio. At Cisco, Disha is at the forefront of integrating advanced, domain-trained models and real-time telemetry into specialized, collaborative AI systems. Her team is pioneering the shift from traditional automation to intelligent, explainable, human-in-the-loop solutions that reason, recommend, and remediate—all while prioritizing transparency and empowering human decision-makers. Disha is passionate about solving complex machine learning challenges and shaping the future of proactive, agentic AI, helping organizations unlock new levels of efficiency and insight. Disha has successfully shipped more than 20 machine learning solutions and holds 15+ patents spanning mobile, cloud, and automotive domains.

Shubhi Asthana of IBM

ReAct Prompt Design: Enhancing LLM Reasoning & Tool Use

IBM is advancing GenAI with ReAct Prompting, a powerful technique that enhances Large Language Models (LLMs) by combining structured reasoning with dynamic tool use. This approach improves decision-making, reduces hallucinations, and enables more reliable AI interactions in retrieval-augmented generation (RAG) and task automation. Learn how ReAct transforms AI workflows, optimizes tool execution, and overcomes key challenges to build smarter, more interpretable LLM systems.
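As background, a ReAct-style loop interleaves model reasoning ("Thought"), tool calls ("Action"), and tool results ("Observation") until the model emits a final answer. The sketch below is a generic illustration, not IBM's implementation: the `Action: tool[input]` and `Final Answer:` text conventions, the scripted stand-in for the LLM, and the `add` tool are all assumptions made for the example.

```python
import re
from typing import Callable, Dict, List

# Assumed output conventions for this sketch.
ACTION = re.compile(r"Action:\s*(\w+)\[(.*?)\]")
FINAL = re.compile(r"Final Answer:\s*(.*)")


def react(llm: Callable[[str], str], tools: Dict[str, Callable[[str], str]],
          question: str, max_steps: int = 5) -> str:
    """Run a reason-act-observe loop until the model gives a final answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # model sees the full history so far
        transcript += step + "\n"
        final = FINAL.search(step)
        if final:
            return final.group(1).strip()
        action = ACTION.search(step)
        if action is None:
            raise ValueError("model produced neither an action nor a final answer")
        name, arg = action.groups()
        # Feed the tool result back so the next thought can build on it.
        observation = tools[name](arg) if name in tools else f"unknown tool {name}"
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("no final answer within max_steps")


def scripted_llm(outputs: List[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for a real model: replays canned steps."""
    remaining = iter(outputs)
    return lambda transcript: next(remaining)


def add(arg: str) -> str:
    """Toy tool: sum integers separated by '+'."""
    return str(sum(int(x) for x in arg.split("+")))


answer = react(
    scripted_llm([
        "Thought: I should add the numbers.\nAction: add[2 + 3]",
        "Thought: I have the result.\nFinal Answer: 5",
    ]),
    {"add": add},
    "What is 2 + 3?",
)
print(answer)
```

Because each observation is appended to the transcript before the next model call, the final answer can be grounded in tool output. The `max_steps` limit keeps a confused model from looping indefinitely.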

Biography:

Shubhi Asthana is a Senior Research Engineer at IBM Research’s Almaden Center, where she develops robust debugging frameworks for agentic AI and privacy guardrails. She spearheads the design and deployment of tools that dramatically improve prompt reliability and curb hallucinations in LLM agents—key components of the IBM Granite platform. Her broader work spans multimodal PII detection across text, audio, and images; adaptive prompt guardrails; and leading benchmarks to evaluate enterprise‑grade LLMs in finance, climate, and cybersecurity. Shubhi holds over 20 patents and has co‑authored more than 40 publications in top venues such as AAAI, NAACL, NeurIPS, and ICAIF.

Agenda

4:00-4:10 IEEE SCV WIE & Santa Clara WIN Welcome Message

4:55-5:25 The LLM Imperative: Secure Your AI Frontier Before It Fractures Your Enterprise - By Jagruti Mahante & Shreya Anand, Workday

5:25-5:55 Designing Data Centers for Next-Gen Language Models - By Jesmin Jahan Tithi, Intel

5:55-6:30 Networking and Refreshments

6:30-6:50 ReAct Prompt Design: Enhancing LLM Reasoning & Tool Use - By Shubhi Asthana, IBM

6:55-7:15 AI Powered SaaS on GCP - By Harika Rama Tulasi Karatapu, Google

7:20-7:40 Designing for Trust: Responsible AI from Data to Deployment - By Pallishree Panigrahi, Amazon

7:45-8:05 The First Enablement of GPU On-Device LLMs on Intel Chromebook Platforms - By Rong Wang and Sushma Venkatesh Reddy, Intel

8:05-8:10 Final Wrap-up